Alright,

If you've been following our little journey here on the internet, you'd know that we've been tinkering with UE5 + AI workflows for the last few years.

Why, you ask?

Well, there's a particular game-engine stink that really messes with the exciting adventure of animating in UE5. We figured an AI "filter" pass would help with that.

Problem? There's a very slight, but very obvious, flicker from frame to frame. Or at least... there has been.

Up until this week, our workflow entailed exporting our UE5 animations as frames. We would then run those frames through a batched Stable Diffusion upscale using the prompt "Cinematic Film Still" with a denoising strength of 0.8. Finally, we would layer that AI pass on top of our UE5 frames and find a nice middle ground that didn't completely destroy our eyeballs.
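If you want to script that "middle ground" step instead of dialing it in by hand in a compositor, here's a minimal sketch. It assumes both passes are already loaded as same-sized 8-bit RGB arrays and uses a plain normal blend at a chosen opacity; the function name `layer_ai_pass` and the `opacity` parameter are our own illustration, not part of any tool mentioned here.

```python
import numpy as np


def layer_ai_pass(ue5_frame: np.ndarray, ai_frame: np.ndarray, opacity: float = 0.5) -> np.ndarray:
    """Composite the AI pass over the UE5 frame using a normal blend at `opacity`.

    Hypothetical helper: opacity 0.0 returns the raw UE5 frame, 1.0 returns the AI pass.
    """
    if ue5_frame.shape != ai_frame.shape:
        raise ValueError("frames must have the same shape")
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be in [0, 1]")
    base = ue5_frame.astype(np.float32)
    top = ai_frame.astype(np.float32)
    # Linear mix of the two layers, then round and clamp back to 8-bit.
    out = (1.0 - opacity) * base + opacity * top
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Run it per frame over the whole sequence with a fixed opacity so the look stays consistent across the shot.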

The problem, of course, was there was still that constant flicker.
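One rough way to put a number on that flicker, sketched under our own assumptions: measure the mean absolute per-pixel change between consecutive frames. This counts real motion too, so it's most useful when you compare the AI pass against the raw UE5 render of the same shot; the extra change in the AI pass is roughly the flicker. The function name is hypothetical.

```python
import numpy as np


def frame_to_frame_flicker(frames: list[np.ndarray]) -> list[float]:
    """Mean absolute per-pixel change between each pair of consecutive frames.

    Hypothetical helper: higher scores mean more change from one frame to the next.
    """
    scores = []
    for prev, curr in zip(frames, frames[1:]):
        # Cast to float so uint8 subtraction can't wrap around.
        diff = np.abs(curr.astype(np.float32) - prev.astype(np.float32))
        scores.append(float(diff.mean()))
    return scores
```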

While we grew to find it endearing for our various screen tests, we knew this wasn't where we wanted to be once we started producing longer short films and web series.

Solution?

Wait.... wait for the technology to catch up.

And this week, my friends... we think we've figured out a solution.

WAN2.1 + WanFUN + WanVACE.....

We gave ourselves 3 days to sort out whether this was a viable approach for our frame-to-frame workflow, and we are happy to report that YES.....yes it is.

Special shout-out to GoshniiAI and MDMZ for their incredible tutorials that helped us get there.

The secret ingredient that really locked in the frame-to-frame consistency of our tests was adding a PyraCanny pass to the workflows.

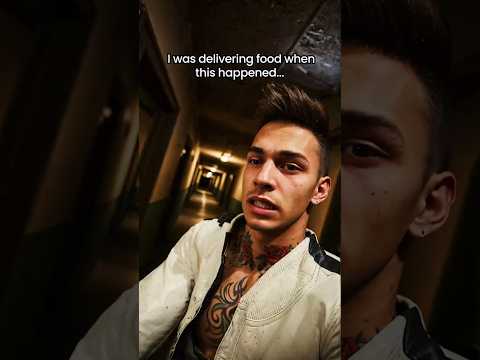

And BAM, we've got a fun adventure coming as we develop our first 10-minute short film. It's our Proof of Concept for the "Monster Sponsor" project we've been developing these last few months.

We are looking forward to implementing this technique in the coming week.

Stay tuned.

PS: We've been watching some of Matt Hallet Visual's work in the VACE space, and his tips are also pretty helpful for understanding what's going on under the hood.